Sections

Sections

| Home |

| Dev Log |

| Resume |

| Free Media |

| Riddles |

| Bob's Gallery |

| Links & Resources |

| Contact |

Projects

Projects

| Stem |

| Nyx |

| Kana Quizzer |

| Keybinding Util |

| Model Gallery |

| Applets |

| Nexus |

Applets

Applets

| Contents |

| Mr. Jelly 2D |

| Mr. Jelly 3D |

| Toroidal Spirals |

| 3D Tetris |

| Tetris AI |

| Bullies |

| Bullies AI |

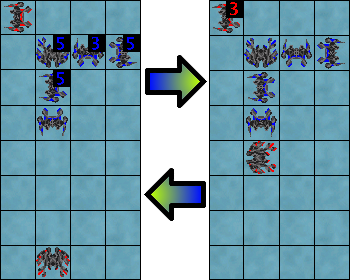

Bullies AI

The rules of the bullies game were chosen to see if a heuristic search would take advantage of teamwork to be effective. This section discusses how the AI works, the problems it encounters, and some thoughts for how it could be improved. A sample game between two computer players at the minimax depth of three can be found here (search logs and images of the board state).

The AI is split into two searches, one to find the local maxima or minima for a turn and an adversarial search to take into account the opponent's actions. The former is more problematic in that the search space is intractable due to the factorial time complexity of piece movement and the need to consider ordering.

The current AI implements Beam Search with the width of fifty and maximum depth of ten to make the search brief enough that it would be acceptable to a human opponent. However, the game does not lend itself well to Beam Search- most local maxima plateau with multiple similar board configurations that prevents the search from exploring less promising branches. The consequence was that the AI absolutely refused to leave safety to finish off an opponent, and the AI reaches stalemate when playing against itself. A promising method of fixing this is to discard results with the same heuristic as the Beam Search's current contents. This seems to make the AI more aggressive near the edge but less willing to explore options in larger boards. For now this is being left out.

The adversarial search component is handled by minimax with alpha-beta pruning with the branching factor limited to five. However, even with a modest number of pieces the search depth of three takes a couple of minutes, making it unreasonable when playing humans. Hence the game defaults to the depth of one (ie, no minimax search).

Perhaps the simplest area the AI could be improved is the heuristic. The search currently takes the following factors into account (in order):

- Number of pieces each player has.

- Number of pieces in danger (within shoving distance of the edge).

- Proximity of the piece's mean distance from the center.

- Clustering of pieces (favoring that pieces keep close proximity with their neighbors).

Queens are weighted heavier than drones in all cases. A couple of other helpful considerations would be the queen's distance from opposing drones and favoring the use of drones as blockers to keep the queen from being shoved far.

Human players tend to look at objectives (such as "wipe out the opponent's queen") and then look for methods of accomplishing it. I'd hoped that that with the computer's ability to check numerous possibilities that forward chaining would be effective, but backward chaining might be worth considering.

While the computer opponent shows some promise, it's not yet particularly challenging. While humanity may someday bow to its robot masters, I sincerely doubt this AI will be it. Cest la vi.

|